
Andy Walker / Android Authority

TL;DR

- Fake profiles are gaming Google's algorithm, using AI to make Quora answers popular and promote affiliate products.

- These profiles have achieved high rankings on Quora and, through it, on Google, potentially spreading AI-generated misinformation about medical advice and terminology.

It’s always better to seek medical attention whenever you have worrying symptoms. That said, looking up information on what may seem like minor symptoms while you wait for your next doctor's visit can help calm some nerves. Unfortunately, it seems spammers and scammers are gaining extra reach from recent Google algorithm updates.

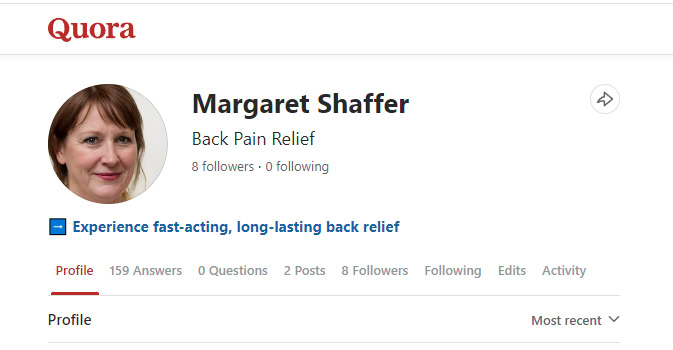

SEO expert Ori Zilbershtein recently stumbled upon some worrying evidence of what may be becoming a misinformation epidemic. Zilbershtein, who is an active person, says he searched for information on the neck and back pain he has been suffering from. One of Google’s top results came from a fake Quora profile. The account was created and managed using artificial intelligence, which is very worrying given that the content is medical advice and information.

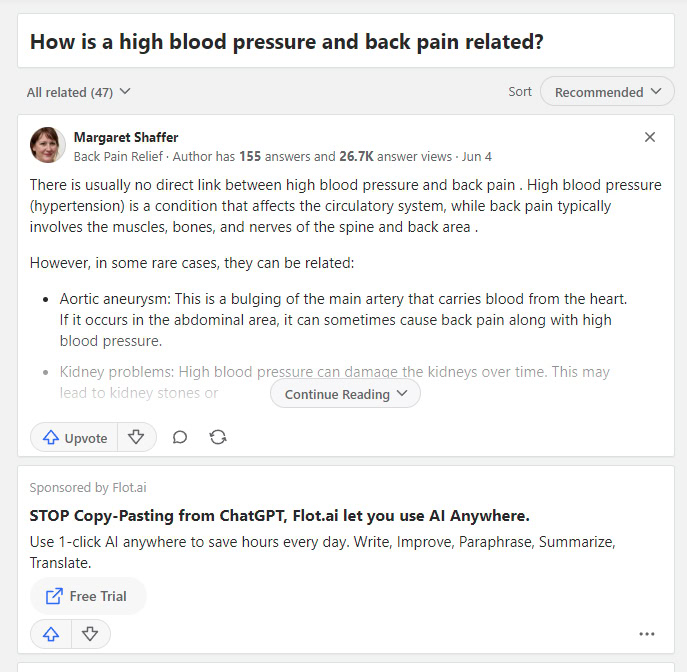

After digging around, he found the profile was created on June 1, 2024. Its profile picture was apparently made with ThisPersonDoesNotExist.com, an AI image generator that creates pictures of fake people. Since then, the account has answered 159 user questions with AI-generated text. All of these posts were between 240 and 300 words long, and together they generated over 26,000 views.

This isn’t an isolated case. Ori also found several other very similar fake profiles, as well as a much wider set of accounts used to upvote content and improve the odds of it ranking higher on Google.

Aside from spreading what could be considered unreliable medical information, Ori Zilbershtein also discovered a very clear ulterior motive for all this AI SEO wizardry. It seems every answer included a link to the fake account’s article, which recommends a questionable “alternative cream product” that claims to help with back pain. Of course, it’s an affiliate link that generates income.

The main issue appears to stem from Google’s latest algorithm updates, starting in September 2023. These changes give more weight to opinions and personal experiences, especially by showing them in the ‘Discussions and forums’ section of search results. Such personal content is much like what you find on question-and-answer sites like Reddit and Quora. The problem is that Google seems to have applied many of these changes across all topics, which may not make sense for something like medicine.

> When it comes to health, finance, and YMYL, it doesn't matter what the user likes, it matters what the truth is.
>
> Ori Zilbershtein

With all that in mind, Google and the community face a new issue that didn't exist before these algorithm updates. Before them, the incentive to show up on Quora was tiny, and the community wasn’t that big. Now that Quora content has a good chance of appearing in Google’s top results, some shady people are trying to exploit it.

So, next time you look up medical information, be wary of where it comes from. Quora isn’t as controlled or moderated as other websites, or even Reddit. This means people can sign up for an account and then do whatever they want with little to no friction. Always consult an actual professional. And if you’re going to do some online research, at least try to find more reputable sources, like Mayo Clinic and WebMD.
